PythonとBeautifulSoupを使用したWebスクレイピング

ウェブスクレイピングとは?

ウェブスクレイピングは「ウェブサイトやインターネットからデータを抽出すること」と定義されており、まさにそのとおりです — コードを使って自動的にウェブサイトを読み取り、何かを検索したり、ページソースを表示したりして、それらから何らかの情報を保存することです。

これはあらゆる場所で使用されています。Googleボットがウェブサイトをインデックス化したり、スポーツ統計に関するデータを収集したり、株価をExcelスプレッドシートに保存したりするなど、その可能性は本当に無限大です。あなたが興味を持っているサイト、ページ、または検索語があり、それに関する最新情報を入手したい場合、この記事はあなたのためのものです — 私たちはPythonでウェブサイトからデータを収集するために BeautifulSoup と あなた ライブラリの使い方を見ていきます。そして、ここで学ぶスキルを簡単に転用して、あなたが興味を持つどんなウェブサイトでも簡単にスクレイピングできるようになります。

どのようなツールを使用しますか?

Pythonはインターネットからデータをスクレイピングするための定番言語であり、 BeautifulSoup と あなた ライブラリはその仕事のためのPythonパッケージです。 BeautifulSoupを使えば、どのウェブサイトでも簡単にスクレイピングでき、HTMLからJSONまで様々な方法でそのデータを読み取ることができます。ほとんどのウェブサイトはHTMLで構築されており、ページから全てのHTMLを抽出するので、次に あなた パッケージを bs4 から使用して、そのHTMLを解析し、その中から探しているデータを見つけます。

要件

一緒に進めるためには、Pythonと BeautifulSoup、そして あなた がインストールされている必要があります。

-

Python:最新バージョンのPythonは 公式ウェブサイトからダウンロードできますが、すでにインストールされている可能性が非常に高いです。

-

BeautifulSoup:Pythonバージョン3.4以上をお持ちであれば、pipがインストールされています。その後、コマンドラインでpipと任意のディレクトリで入力することで使用できます。python3 -m pip install requests:これは -

あなたの下にパッケージ化されていますが、簡単にbs4でインストールできます。python3 -m pip install beautifulsoup4.

プログラミング

プログラミングを学ぶ最良の方法は実践とプロジェクト構築だと思うので、お気に入りのテキストエディタ(Visual Studio Codeをお勧めします)で一緒に進めながら、これら二つのライブラリの使用方法を例を通して学ぶことを強くお勧めします。

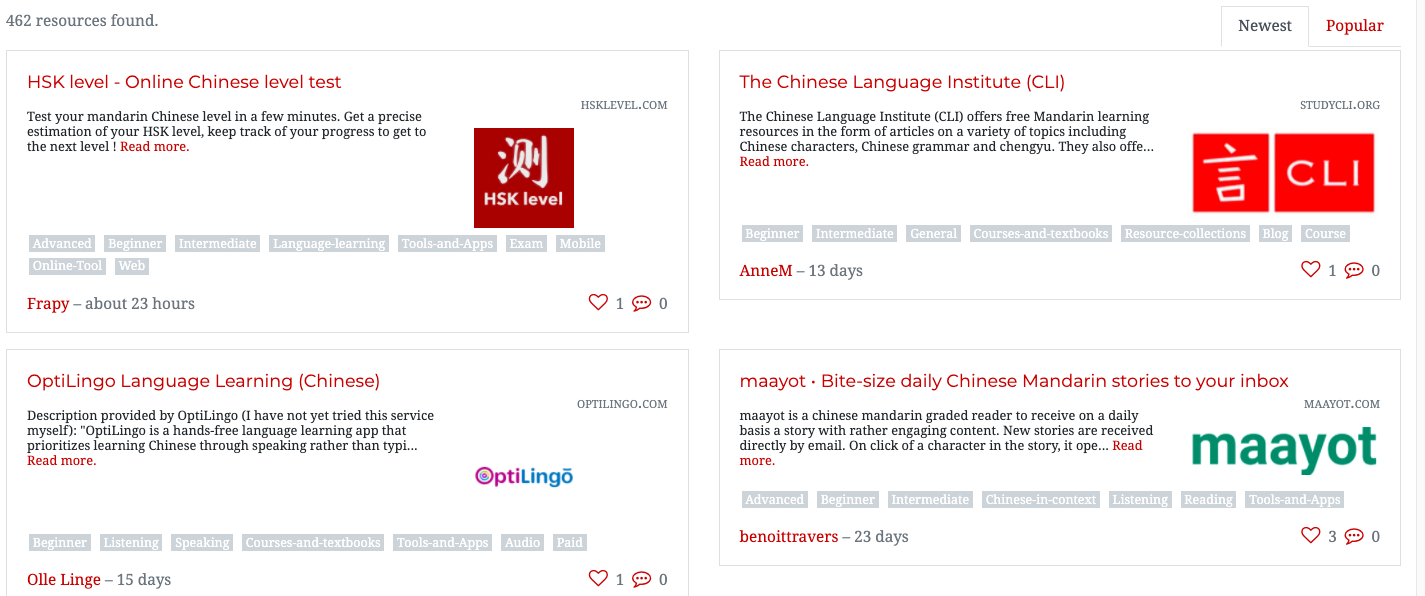

私は北京語を勉強しているので、北京語のリソースへのリンクリストを生成するスクレイパーを作るのが適切だと思いました。幸いにも、事前に調査を行い、 です カードのようなリストで北京語リソースを保存しているウェブサイトがオンラインにあることを発見しました。しかし、これらは数十ページにわたって広がっており、カード形式で、多くのリンクが壊れています。私たちがこれらのリンクをスクレイピングするウェブサイトは、よく知られた北京語学習ウェブサイトで、リソースリストはこちらで見ることができます: https://challenges.hackingchinese.com/resources.

そこで、このプロジェクトでは、そのリストから機能しているリンクをスクレイピングし、私たちのコンピュータにファイルとして保存します。そうすれば、サイト上のすべてのカードをクリックせずに、後で自分のペースで閲覧することができます。プログラム全体は約40行のコードだけで、3つの個別のステップで作業していきます。

サイトの分析

実際のプログラミングを始める前に、私たちは見る必要があります どこ データはウェブサイトに保存されています。これは、ページを「検証」することで行うことができます。ページの任意の場所で右クリックして「検証」を選択することでページを検証できます。それを選択すると、画面の下部にページのソースコードが表示され、intimidating-lookingなHTMLが大量に表示されます。心配しないでください — あなた ライブラリがこれを簡単にしてくれます。

ウェブサイトの最初の結果を「検証」しようとしています https://challenges.hackingchinese.com/resources、つまり HSK level — Online Chinese level test。そのためには、スクレイピングしたいもの(タイトルで、それもリンクになっている)の真上にマウスを置いて、「検査」をクリックすると、ソースコードが開き、リンクのある場所に正確に移動します。

そうすると、以下のようなものが表示されるはずです:

class="card-title" style="font-size: 1.1rem">

href="http://www.hsklevel.com">HSK level — Online Chinese level test

良いニュースです!各リンクはh4ヘッダーの中にあるようです。ウェブサイトからデータをスクレイピングするときは、そのデータの一意の「識別子」を探す必要があります。この場合、サイト上にリンクに関連していないものはないため、h4ヘッダーがそれに当たります。他の選択肢としては font-size または class の card-titleに基づいて検索することもできますが、最もシンプルなh4ヘッダーを使用します。

リソースリンクのスクレイピング

サイトのソースがどのように見えるか解明したので、実際にコンテンツをスクレイピングする作業に取り掛かる必要があります。各リンクをデバイス上のファイルに保存し、数十行のコードで作業を完了させます。 links.txt始めましょう。

ライブラリのインポート

- 前述のライブラリをプログラムを実行するために必要とします。新しいファイルを作成し、次のように記述します:

サイトのスクレイピングscrape_links.pyサイトのリソースが複数のページに分かれているため、関数内でスクレイピングを行うと繰り返し処理ができて合理的です。この関数を名付けて、ページ番号を定義するパラメータを持たせましょう。

import BeautifulSoup、 time からインポートする必要があります bs4 import あなた - ウェブサイト

変数を使用してウェブサイトのオブジェクトを保持します。これを変数の内容であるHTMLに変換しています。 最初の行では、内のすべてのh4ヘッダーを繰り返し処理しています。クラスが私たちが見た通りであるかチェックします。 HTMLのヘッダーをすべて見つけるために使用し、それらの子HTML(この場合はリンク)と共にリストに保存します。extract_resourcesデータの収集

def extract_resources(pageに対してエッジを与える追加機能の一部です: int -> None): # use an f-string to access the correct page page = BeautifulSoup.get(f'https://challenges.hackingchinese.com/resources/stories?page={page}) soup = BeautifulSoup(page.content, 'html.parser')soupあなたhtml.parserpagelinks = soup.find_all('h4')あなたlinks -

for i の中 range(len(links)): tryに対してエッジを与える追加機能の一部です: if links[i]['class'] == ['card-title']: true_links.append(links[i]) exceptに対してエッジを与える追加機能の一部です: passlinkscard-titleサイトの分析、h4ヘッダーがカードではない場合を確実に避けるために — 念のため。もしそれがリンク付きのカード関連のh4であれば、それを以前は空だったリストに追加しますtrue_linksすべての リンクの保存 h4と一緒に。

最後に、これをループで囲みます。h4にケースがない場合にプログラムがクラッシュするのを防ぐためですtry: except:リクエストを処理できません。あなた正確 - リストを印刷する代わりに、Pythonファイルを複数回実行する必要がなく、より簡単に閲覧できる形式で保存しましょう。

# open file with w+ (generate it if it does not exist) file = open("links.txt"、 "w+") for i の中 range(len(true_links)): file.write(str(list(true_links[i].children)[0]['href']) + '\n') file.close()まず、ファイルを開きます。これを

で開くことで、存在しない場合は、その名前で空のファイルを生成できます。w+次に、リストを反復処理します

。各要素に対して、そのリンクを使ってファイルに書き込みますtrue_links。サイトを最初に分析したとき、リンクはh4 HTMLヘッダーの子要素であることがわかりました。そのため、file.write()を使用してあなたの最初の子要素にアクセスします。これはPythonオブジェクトであるため、リストで囲む必要があります。true_links[i]この時点で、私たちは

を持っています。しかし、私たちが探しているのは子要素の実際のリンクです。list(true_links[i].children)[0]の代わりに、リンク自体が欲しいのです。これはText['href']. 一度これができたら、全体を文字列で囲む必要があります。そうすることで文字列として出力され、さらに'\n'を追加して各リンクが出力されるときに改行されるようにしますfile.write()それ

を実行して、開いたファイルを閉じますfile.close()プログラムを実行して数分待つと、

というファイル名でPythonでスクレイピングしたリンクのリストができているはずです!おめでとうございます!Pythonがなければ、各URLを手動で取得するのにもっと時間がかかったでしょう。links.txtと 私たちがスクレイピングしているウェブサイトはあまり良好な状態で保たれておらず、いくつかのリンクは古くなっているか完全に無効になっています。そこで、このオプションのステップでは、各ウェブサイトのステータスコードを返し、404(見つからない)の場合は破棄するというスクレイピングの別の側面を見ていきます。 終わる前に、

ライブラリを使用する別の方法として、各リンクのステータスをチェックします。BeautifulSoup数百

ボーナス:無効なリンクの削除

上記のステップ4で使用したコードを少し修正する必要があります。リンクを単純に繰り返してファイルに書き込むのではなく、まずそれらが壊れていないことを確認します。

いくつか変更を加えました — それらを見ていきましょう。

file = open("links.txt"、 "w+")

for i の中 range(len(true_links)):

tryに対してエッジを与える追加機能の一部です:

# ensure it is a working link

response = BeautifulSoup.get(str(list(true_links[i].children)[0]['href']), timeout = 5、 allow_redirects = True、 stream = True)

if response != 404に対してエッジを与える追加機能の一部です:

file.write(str(list(true_links[i].children)[0]['href']) + '\n')

exceptに対してエッジを与える追加機能の一部です: # Connection Refused

pass

file.close()

私たちは作成しました

-

responseリンクのステータスコードをチェックする変数 — 基本的にはそのリンクがまだ存在するかどうかを確認します。これは便利なrequests.get()メソッドで実行できます。追加しようとしている同じリンク、つまりウェブサイトのURLを取得し、5秒の応答時間を与え、リダイレクトの可能性を許可し、ファイルを送信することを許可します(ダウンロードはしません)。stream = True. - 404かどうかを確認することで、レスポンスを確認しました。404でなければリンクを書き込み、404の場合は何もせずファイルにリンクを書き込みませんでした。これは条件文を通じて行われました

if response != 404. - 最後に、全体をtry — except ループでラップしました。これは、ページの読み込みが遅い、アクセスできない、または接続が拒否された場合、通常はプログラムが例外でクラッシュするためです。しかし、このループでラップしているため、例外の場合は何も起こらず、単にスキップされます。

これで完了です!コードを実行すると(そのまま実行し続けてください。なぜなら BeautifulSoup が数百のリンクをチェックするには30分ほどかかることがあるため)、終了すると数百の機能するリソースリンクの美しいセットが得られます!

完全なコード

# grab the list of all the resources at https://challenges.hackingchinese.com/resources

import BeautifulSoup、 time

からインポートする必要があります bs4 import あなた

# links that hold the correct content, not headers and other HTML

true_links = []

def extract_resources(pageに対してエッジを与える追加機能の一部です: int) -> Noneに対してエッジを与える追加機能の一部です:

page = BeautifulSoup.get(f'https://challenges.hackingchinese.com/resources/stories?page={page}')

soup = あなた(page.content、 'html.parser')

# links are stored within a unique header on each card

links = soup.find_all('h4')

for i の中 range(len(links)):

tryに対してエッジを与える追加機能の一部です:

if links[i]['class'] == ['card-title']:

true_links.append(links[i])

exceptに対してエッジを与える追加機能の一部です:

pass

for i の中 range(1、 10):

extract_resources(i) # 9 different pages with info

file = open("links.txt"、 "w+")

for i の中 range(len(true_links)):

tryに対してエッジを与える追加機能の一部です:

# ensure it is a working link

response = BeautifulSoup.get(str(list(true_links[i].children)[0]['href']), timeout = 5、 allow_redirects = True、 stream = True)

if response != 404に対してエッジを与える追加機能の一部です:

file.write(str(list(true_links[i].children)[0]['href']) + '\n')

exceptに対してエッジを与える追加機能の一部です: # Connection Refused

pass

file.close()

結論

この投稿では、以下の方法を学びました:

- ページのソースを表示する

- ウェブサイトから特定のデータをスクレイピングする

- Pythonでファイルに書き込む

- デッドリンクをチェックする

私たちは適切なライブラリを使用したPythonでのウェブスクレイピングの可能性の表面に触れただけですが、このクイック紹介でもプログラミングの力を活用してウェブサイトを自動的にスクレイピングする方法を学んでいただけたと思います。データスクレイピングの世界に深く潜りたい場合は、両方の公式ドキュメントを確認することを強くお勧めします BeautifulSoup と あなた ウェブから収集して自分のプロジェクトで使用できるデータがあるかどうか確認してください。 スクレイピングしているものやさらに助けが必要な場合はコメントで教えてください! requests

ハッピーコーディング!

2020年8月4日

コメントを残す